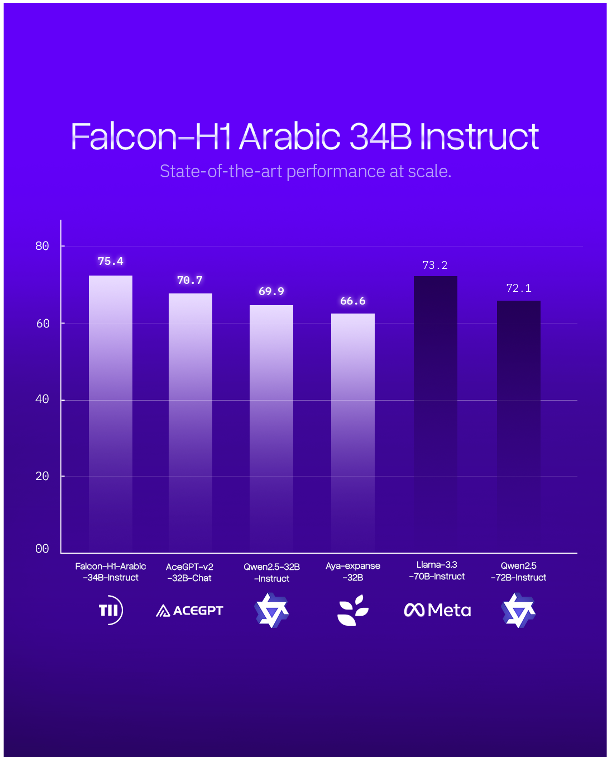

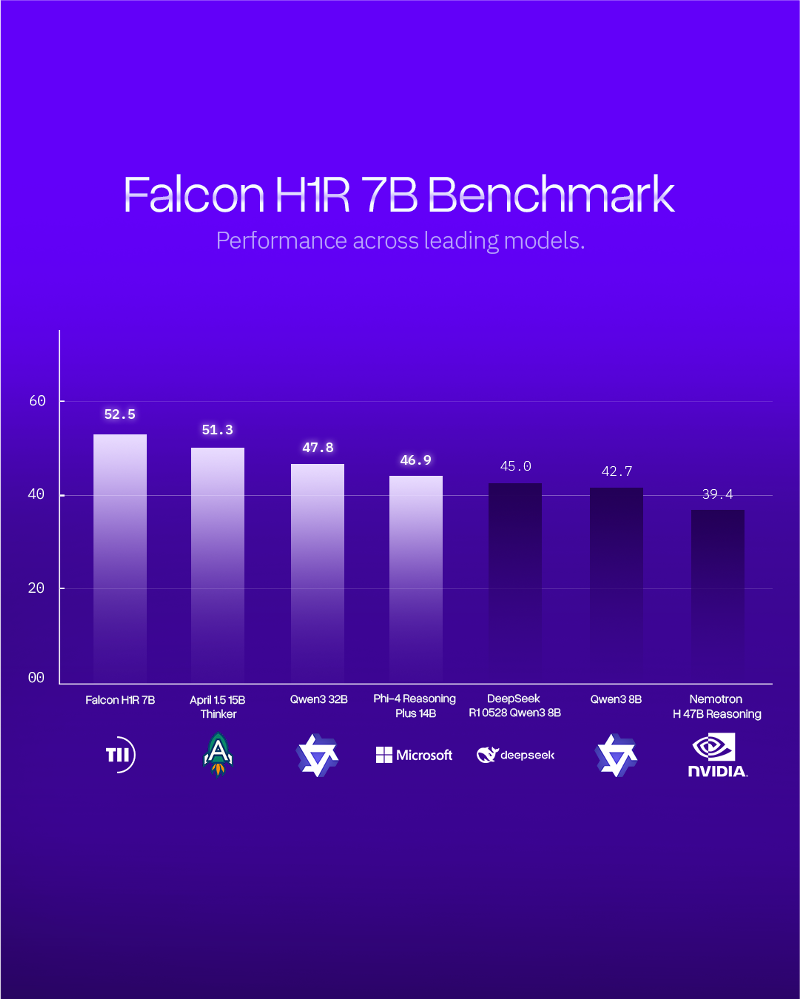

The Technology Innovation Institute (TII), part of Abu Dhabi’s Advanced Technology Research Council (ATRC), has announced the release of Falcon H1R 7B, an artificial intelligence model designed to deliver strong reasoning performance using fewer computing resources.

Falcon H1R 7B contains 7 billion parameters and focuses on practical deployment needs such as speed, memory use, and energy demand. Independent testing shows the model competing with and often outperforming larger open-source models from Microsoft, Alibaba, and NVIDIA. The release reflects TII’s research direction and the UAE’s growing role in global AI development.

His Excellency Faisal al Bannai, Adviser to the UAE President and Secretary General of the Advanced Technology Research Council, said, “Falcon H1R reflects the UAE’s commitment to building open and responsible AI that delivers real national and global value. By bringing world-class reasoning into a compact, efficient model, we are expanding access to advanced AI in a way that supports economic growth, research leadership, and long-term technological resilience.”

Improving Reasoning at Runtime

Falcon H1R 7B builds on the Falcon H1-7B model and uses a hybrid Transformer–Mamba architecture. This structure allows the model to process tasks faster while preserving reasoning quality. Training focuses on test-time reasoning, meaning the reasoning the model performs at inference, so that gains carry over to real use.

“Falcon H1R 7B marks a leap forward in the reasoning capabilities of compact AI systems,” said Dr Najwa Aaraj, CEO of TII. “It achieves near-perfect scores on elite benchmarks while keeping memory and energy use exceptionally low, critical criteria for real-world deployment and sustainability.”

Researchers describe this capability as latent intelligence. It allows the model to handle complex reasoning tasks without relying on scale alone. The result is a balance between speed, accuracy, and resource use.

Performance Results

Falcon H1R 7B delivered strong results across several benchmark categories:

In mathematics testing, the model scored 88.1% on AIME-24, exceeding the result of ServiceNow AI’s Apriel 1.5 (15B).

In coding and agent-based benchmarks, Falcon H1R 7B achieved 68.6% accuracy. It ranked highest among models below 8B parameters and exceeded results from some larger models on LCB v6, SciCode Sub, and TB Hard.

In general reasoning tasks, the model performed at a similar level to larger systems such as Microsoft’s Phi 4 Reasoning Plus (14B), despite using fewer parameters.

In efficiency tests, Falcon H1R 7B reached speeds of up to 1,500 tokens per second per GPU at batch size 64. That is close to twice the speed of Qwen3-8B, a gain enabled by the model’s hybrid architecture.
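To put the throughput figure in perspective, the short sketch below converts it into a per-request rate. It assumes the 1,500 tokens per second is the aggregate across all 64 sequences in the batch, which the announcement does not state explicitly. The 8,000-token trace length is a hypothetical value chosen only for illustration, and the function names are not part of any TII tooling.

```python
def per_sequence_rate(aggregate_tokens_per_s: float, batch_size: int) -> float:
    """Average decode rate seen by one sequence when a batch shares one GPU."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return aggregate_tokens_per_s / batch_size


def seconds_to_generate(num_tokens: int, tokens_per_s: float) -> float:
    """Time needed to emit num_tokens at a steady decode rate."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return num_tokens / tokens_per_s


# Figures quoted in the article (upper bound: "up to 1,500").
AGGREGATE_TOKENS_PER_S = 1500.0
BATCH_SIZE = 64

# Hypothetical length of one reasoning trace; not a figure from the article.
TRACE_TOKENS = 8000

rate = per_sequence_rate(AGGREGATE_TOKENS_PER_S, BATCH_SIZE)
latency = seconds_to_generate(TRACE_TOKENS, rate)

print(f"{rate:.1f} tokens/s per sequence")
print(f"{latency:.0f} s for a {TRACE_TOKENS}-token trace")
```

Under these assumptions each request decodes at roughly 23 tokens per second, so the headline number describes how many requests one GPU can serve at once rather than how fast any single answer arrives.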

“This model is the result of world-class research and engineering. It shows how scientific precision and scalable design can go hand in hand,” said Dr Hakim Hacid, Chief Researcher at TII’s Artificial Intelligence and Digital Research Centre. “We are proud to deliver a model that enables the community to build smarter, faster, and more accessible AI systems.”

Open Release and Ongoing Research

TII has released Falcon H1R 7B as open source under the Falcon TII License. The model is available on Hugging Face alongside a detailed technical report covering training methods and benchmark outcomes.

This release continues the Falcon programme’s track record. Previous Falcon models have ranked highly in global benchmarks across several generations. Together, these results show how smaller models can deliver strong performance and support the UAE’s position in advanced AI research.